Would you like to have over 700MB/sec throughput between your PCs at home for under €110? That’s like a full CD’s worth of data every second! If you do, then read on.

–edit–

Since this article was originally written, I’ve found that the real-world throughput of Infiniband between a Windows machine and an Ubuntu machine maxes out at 135MB/sec, just under twice what my 1Gbps ethernet gives me (75MB/sec). That’s with a RAID array capable of 350MB/sec on the Linux side, feeding a Samba link to the Windows machine at 95% CPU. So it falls well short of the 700MB/sec that I thought might be possible. It’s not possible with IP over Infiniband. And iSER isn’t available on Windows, so no SRP targets, which use RDMA, could be used. So a whole lotta research led to brick walls and a 135MB/sec max.

—end edit—

With the increasing amount of data that I have to manage on my computers at home, I started looking into a faster way of moving data around the place. I started with a RAID array in my PC, which gives me read-write speeds of 250MB/sec. Not being happy with that, I looked at creating a bigger external array, with more disks, for faster throughput. I happened to have a decent Linux box sitting there doing very little. It had a relatively recent motherboard and 8 SATA connectors. But no matter how fast I got the drives in that Linux box to go, I’d always be limited by the throughput of the 1Gb ethernet network between the machines, so I researched several different ways of inter-PC communication that might break the 1Gbps barrier. The 1Gb ethernet was giving me about 75MB/sec throughput.
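To put that 75MB/sec in context, here is a quick back-of-the-envelope check of what a 1Gb link could carry at the very best. It is only a rough sketch: it ignores all protocol overhead, and the helper name `link_mb_per_sec` is made up for illustration.

```python
def link_mb_per_sec(gbits_per_sec):
    """Convert a link's data rate in Gbit/s to MB/s (decimal units, 8 bits per byte)."""
    return gbits_per_sec * 1000 / 8

ceiling = link_mb_per_sec(1)   # 1Gb ethernet, ignoring all protocol overhead
measured = 75                  # MB/sec observed between the two machines
local_raid = 250               # MB/sec from the RAID array inside the PC

print(f"1Gb ethernet ceiling: {ceiling:.0f} MB/sec")
print(f"Measured: {measured} MB/sec ({measured / ceiling:.0%} of the ceiling)")
print(f"Local RAID array is {local_raid / measured:.1f}x faster than the network")
```

Even a perfect 1Gb link tops out at half the speed of the local array, so a faster interconnect is the only way to make a remote array worthwhile.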

The first I looked at was USB 3.0 (5 gbit/s). While that’s very good for external hard drives, there didn’t seem to be a decent solution out there for allowing multiple drives to be added together to increase throughput. We are now starting to see RAID boxes appear with USB 3.0 interfaces, but they are still quite expensive. To connect my existing Linux box to my Windows desktop, I’d need a card with a USB 3.0 slave port so that the external array would look like one big drive and max out the 5Gbps bandwidth of a USB 3.0 link. However, these do not seem to exist, so I moved on to the next option.

Then I moved on to 10G Ethernet (10 gbit/s). One look at the prices here and I immediately ruled it out. Several hundred Euro for a single adapter.

Fibre channel (2-8 gbit/s). Again the pricing was prohibitive, especially for the higher throughput cards. Even the 2Gbps cards were expensive, and would not give me much of a boost over 1Gbps ethernet.

Then came Infiniband (10-40 gbit/s). I came across this while looking through the List of Device Bit Rates page on Wikipedia. I had heard of it as an interconnect in cluster environments and high-end data-centres, and I assumed that the price would be prohibitive. A 10G adapter would theoretically give up to a Gigabyte per second throughput between the machines. However, I wasn’t ruling it out until I had a look on eBay at a few prices. To my surprise, there was a whole host of adapters available, ranging from several hundred dollars down to about fifty dollars. $50 for a 10Gig adapter? Surely this couldn’t be right. I looked again, and I spotted some dual port Mellanox MHEA28-XTC cards at $35.99. This worked out at about €27 per adapter, plus €25 shipping. Incredible, if I could get it to work. I’d also read that it is possible to use a standard Infiniband cable to directly connect two machines together without a switch, saving me about €700 in switch costs. If I wanted to bring another machine into the Infiniband fabric, though, I’d have to bear that cost. For the moment, two machines directly connected was all I needed.
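For a rough side-by-side of some of the options above, the sketch below turns the quoted link rates into best-case MB/sec. The 8b/10b encoding factor (10 bits on the wire for every 8 bits of data) is my assumption for USB 3.0 and for a 10G Infiniband link of the SDR 4x kind, which is where the "Gigabyte per second" figure comes from. The function and table names are made up for illustration, and none of this accounts for protocol or CPU overhead.

```python
# (quoted rate in Gbit/s, assumed encoding efficiency)
LINKS = {
    "1Gb ethernet": (1.0, 1.0),
    "USB 3.0": (5.0, 0.8),                  # assumes 8b/10b line encoding
    "10G Ethernet": (10.0, 1.0),
    "Infiniband 10G (SDR 4x)": (10.0, 0.8), # assumes 8b/10b line encoding
}

def payload_mb_per_sec(signal_gbits, encoding_efficiency):
    """Best-case payload rate in MB/s for a given link rate and encoding efficiency."""
    return signal_gbits * encoding_efficiency * 1000 / 8

for name, (rate, efficiency) in LINKS.items():
    print(f"{name:26s} {payload_mb_per_sec(rate, efficiency):7.0f} MB/sec")
```

These are ceilings only; what the machines at each end can actually push through the link is a separate question.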

With a bit more research, I found that drivers for the card were available for Windows 7 and Linux from OpenFabrics.org, so I ordered 2 cards from the U.S. and a cable from Hong Kong.

About 10 days later the adapters arrived. I installed one adapter in the Windows 7 machine. Windows initially failed to find a driver, so I then went on the OpenFabrics.org website and downloaded OFED_2-3_win7_x64.zip. After installation I had two new network connections available in windows (the adapter was dual-port), ready for me to connect to the other machine.

Next I moved on to the Linux box. I won’t even start with the hassle I had installing the card in my Linux box. After days of research, driver installation, kernel re-compilation, driver re-compilation, etc. etc., etc., etc., I eventually tried swapping the slot that I had the card plugged into. Lo and behold, the f&*cking thing worked. So, my motherboard has two PCIe x16 slots, and the Infiniband adapter would work in one, but not in the other. Who would have thought. All I had to do then was assign an IP address to it. –EDIT– here’s a quick HOWTO on getting the fabric up on Ubuntu 10.10. About 10 minutes should get it working – http://davidhunt.ie/wp/?p=375 –EDIT–

Without a cable (it still had not arrived from Hong Kong), all I could do was sit there and wait until it arrived to test the setup. Would the machines be able to feed the cards fast enough to get a decent throughput? On some forums I’d seen throughput tests of 700MB/sec. Would I get anywhere close to that with a 3GHz dual core athlon to a 3GHz i7 950?

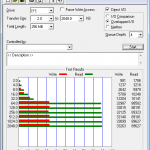

A few days later, the cable arrived. I connected the cable to each machine, and could immediately send pings between the machines. I’d previously assigned static IP addresses to the Infiniband ports on each machine. I wasn’t able to run “netperf”, as it didn’t see the cards as something it could put traffic through. So I upgraded the firmware on the cards, which several forums said would improve throughput and compatibility. I was then able to run netperf, with the following results:

    root@raid:~# netperf -H 10.4.12.1
    TCP STREAM TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 10.4.12.1 (10.4.12.1) port 0 AF_INET : demo
    Recv   Send    Send
    Socket Socket  Message  Elapsed
    Size   Size    Size     Time     Throughput
    bytes  bytes   bytes    secs.    10^6bits/sec
     87380  16384  16384    10.00    7239.95

That’s over 7 gigabits/sec, or over 700MB/sec throughput between the two machines!
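For anyone who wants to check the sums, here is the conversion from netperf’s units to MB/sec as a small sketch. The 7239.95 figure is from the run above and the 75MB/sec is my earlier 1Gb ethernet measurement; the variable names are just for illustration.

```python
netperf_mbits = 7239.95          # 10^6 bits/sec, as reported by netperf
mb_per_sec = netperf_mbits / 8   # 8 bits per byte, decimal megabytes
gige_measured = 75               # MB/sec measured over 1Gb ethernet

print(f"{netperf_mbits / 1000:.2f} Gbit/sec = {mb_per_sec:.0f} MB/sec")
print(f"Speed-up over 1Gb ethernet: {mb_per_sec / gige_measured:.1f}x")
```

That works out to roughly 905MB/sec of raw TCP traffic. Bear in mind this is memory-to-memory throughput as measured by netperf, not a file copy.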

So, I now have an Infiniband Fabric working at home, with over 7 gigabit throughput between PCs. The stuff of high-end datacentres in my back room. The main thing is that you don’t need a switch, so a PC to PC 10-gigabit link CAN be achieved for under €110! Here’s the breakdown:

2 x Mellanox MHEA28-XTC Infiniband HCAs @ $34.99 + shipping = $113 (€85)

1 x 3m Molex SFF-8470 infiniband cable incl shipping = $29 (€22)

Total: $142 (€107)

The next step is to set up a RAID array with several drives and stripe them so they all work in parallel, and maybe build it in such a way that if one or two drives fail, it will still be recoverable (RAID 5/6). More to come on that soon.
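To show why a striped array with parity can survive a lost drive, here is a toy sketch of the XOR parity idea behind RAID 5. It is an illustration only, not how a real array is built: the drive contents and the `xor_blocks` helper are made up, real RAID 5 rotates the parity block across the drives, and RAID 6 needs a second, different parity calculation that isn’t shown here.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# One stripe spread over three hypothetical data drives, plus one parity block.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)

# Simulate losing the second drive, then rebuild it from the survivors and the parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

print("rebuilt block matches the lost one:", rebuilt == data[1])
```

Because XOR-ing everything that is left gives back whatever is missing, any single drive in the stripe can be rebuilt, at the cost of one drive’s worth of capacity.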

References:

http://hardforum.com/showthread.php?p=1036510049

http://www.zdnet.com/blog/storage/build-a-10-gbit-home-network-for-1100/284

http://www.gossamer-threads.com/lists/drbd/users/19594

http://www.mellanox.com

http://www.openfabrics.org
